SQP

NLP solvers: an overview

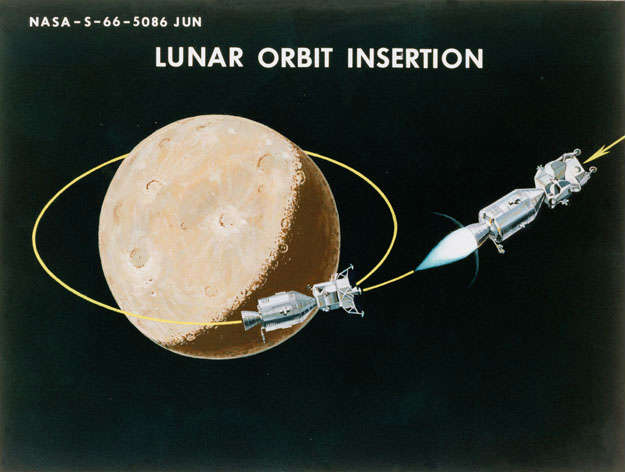

Optimization problems are used to obtain an exact solution (within a certain tolerance) in a number of important scientific applications, such as optimal control of a system driven by a complex differential dynamics, and trajectory optimization of manned space vehicles. In Apollo 11 mission "an iterative steepest ascent computational procedure was used to determine control-variable time histories (angle of attack and roll angle) which yield trajectories satisfying specified initial, in-flight, and terminal constraints while optimizing selected functions of the terminal state" (a very good read and reference here).

So far we have studied techniques to apply the optimization theory to different mathematical problems, that we classified into two categories based on the order of the cost function and the constraints. Nonlinear programming or NLP is a difficult field so it's always convenient to use special cases for study. Note that the meaning of "programming" is "planning", the theory behind was only applied to real algorithms later so the relation with computer programming is purely accidental. Let's see the two categories to classify our problems.

Problems with linear cost function and constraints belong to the category of linear problems and can be solved involving linear programming or LP. There are two different approaches that we studied to solve LP problems, the simplex method, and the interior-point method.

Problems with a nonlinear quadratic cost function and linear constraints are part of the class of quadratic problems and can be solved using quadratic programming or QP. We have studied the sequential quadratic programming method that solves a QP problem at each iterate until it reaches a (tolerable) solution.

Now let's see the solvers and their algorithms in practice. There is a number of different optimal control solvers for NLP problems expressed in the form

But enough theory! Let's have a look at the most famous solvers. The next set of exercises introduces you to some of them!

Exercise 1

IPOPT is the most famous open-source optimization package for large-scale nonlinear optimization. It can be used to address general NLP problems in the form as we described in the equation above. You can download and compile the source on your own, or just download the executable to use with the modeling language (skip here).

Compile IPOPT source code

Internally, IPOPT implements an interior-point line-search filter method, a variation of the interior-point method that we learned in LP. It is particularly suitable for large scale problems with many constraints (with the assumption that the Jacobian matrix of the constraint function is sparse). Take also in mind that the optimizer searches for a local minimum, thus if the problem is non-convex the solution strongly depends on the initial guess.

First, some tools might be necessary

sudo apt-get install gcc g++ gfortran git cmake liblapack-dev pkg-config --install-recommendsLet's download IPOPT and the third-party dependencies

wget https://www.coin-or.org/download/source/Ipopt/Ipopt-3.12.12.tgz

tar xzf Ipopt-3.12.12.tgz -C ~/cd ~/Ipopt-3.12.12/ThirdParty/Blas && ./get.Blas && cd ..

cd Lapack && ./get.Lapack && cd ..

cd Metis && ./get.Metis && cd ..

cd Mumps && ./get.Mumps && cd ..

cd ..; mkdir build; cd buildUse the following commands to configure and install IPOPT

../configure --prefix=/usr/local ADD_FFLAGS=-fPIC ADD_CFLAGS=-fPIC ADD_CXXFLAGS=-fPICmake; sudo make installNow, IPOPT should be installed and compiled. You should find the executable that you will need in the following exercise in the bin subdirectory.

Download the executable

Instead of downloading and compiling the library on your own, you can simply download the executable that you will use in the next example

wget https://ampl.com/dl/open/ipopt/ipopt-linux64.zip

mkdir ~/Ipopt; unzip ipopt-linux64.zip -d ~/IpoptFinally, you should find your IPOPT executable in the ~/Ipopt directory (or ~/Ipopt-3.12.12/build/bin).

Exercise 2

The easiest way to run IPOPT (or any other solver) is to use it in connection with a modeling language, such as Ampl. You can download a free student version. It limits the number of variables and constraints to 300 but for our analysis, it will be enough

wget https://ampl.com/demo/amplide.linux64.tgz

mkdir ~/Ampl; tar xzf amplide.linux64.tgz -C ~/AmplLet's execute and configure Ampl by running the following commands

cd ~/Ampl/amplide.linux64/amplide

./amplideNow you need to add IPOPT path to the IDE so Ampl will recognize it. Go to Window, Preferences, choose Search Path tab on the left, and click Add. Now browse to the directory where your IPOPT source (ipopt) is located (~/Ipopt or ~/Ipopt-3.12.12/build/bin) and restart the IDE.

Alternatively, you can use Ampl as a command-line tool. First, add it to the environment variable, so you can run it directly typing ampl

cd ~/Ampl/amplide.linux64

echo 'export PATH=${PATH}:'$PWD >> ~/.bashrc

source ~/.bashrcOnce everything is working, let's model the following optimization problem (Problem 71 from the standard Hock-Schittkowski collection)

option solver ipopt;

reset;or type the following command if you are using Ampl as a command line tool from the IPOPT executable folder

option solver './ipopt';to declare the variables and define the bounds use

var x1 >= 1, <= 5;

var x2 >= 1, <= 5;

var x3 >= 1, <= 5;

var x4 >= 1, <= 5;to define the cost function

minimize cost: x1 * x4 * (x1 + x2 + x3) + x3;to specify the constraint, and finally solve the NLP

subject to inequality1: x1 * x2 * x3 * x4 >= 25; # inequality constraints

subject to inequality2: 40 <= x1^2 + x2^2 + x3^2 + x4^2 <= 40; # equality constraint

solve;With solve command you will see a lot of statistics on the obtained solution, you can have a look here to know what does the showed data mean with respect to the interior-point algorithm. If you have the string "Optimal Solution Found" at the end of the output, it means the solution has been found.

Let's check if the solution is correct. Type the following command for all the variables \(x_i\;\mathrm{s.t.}\;i\in{1,\dots,4}\)

display x1;and compare the result with the analytic result from Problem 71 here.

Exercise 3

Now it's your turn! Try to model Problem 12.19 from the book and compare it with the one that we solved during constrained optimization module here and Exercise 5 here. Is the optimal solution \(x^*\) the same?

Exercise 4

You are familiar with IPOPT; it's a great solver and it's open-source so you can use and personalize it to your needs. But many times you will encounter the problem of choosing the right solver for your NLPs. So let's have a look at the others (remember always to reset between two different solvers executions in Ampl).

SNOPT is among the most famous commercial solvers. It uses sequential quadratic programming SQP with quasi-Newton approximations of the Hessian (of the Lagrangian). SNOPT is widely used for many problems involving trajectory optimization.

Knitro is a complete solver with a number of different algorithms from interior-point methods (that normally follows a path in the feasible region), to active set algorithms as SQP (that stays at the boundaries).

Try to run Exercise 3 with different solvers and see the difference in the computed solution. For instance, you can switch the solver to SNOPT (included in Ampl) by typing

option solver snopt;or run the modeling environment as a command-line tool from the Ampl directory where the solvers are stored

cd ~/Ampl/amplide.linux64

ampl

option solver './snopt';which one is the best for this problem? Hint: evaluate the goodness of a solver by the accuracy (you should also include other parameters, as execution time, in a real scenario).

Exercise 5

Try to optimize the Example 1 and 2 from the theory slides. What are the solutions?

Appendix

Note that you can use IPOPT (or any other solver that is compatible with the standard modeling languages), in a programming language (C/C++, Python, Matlab), but you must implement following functions to provide IPOPT with the necessary mathematical evaluations

- problem size by defining

get_nlp_info - starting point:

get_starting_point - cost function \(f(x_k)\) and constraints \(c(x_k)\) values:

eval_fandeval_g - the first derivatives \(\nabla f(x_k)\) and \(\nabla c(x_k)\) values:

eval_grad_fandeval_jac_g - the second derivative \(\delta_f\nabla^2f(x_k)+\sum_{j=1}^m \lambda_{k,j}\nabla^2c_{k,j}(x_k)\) value:

eval_h - a mechanism to finalize the solution:

finalize_solution

cd ~/Ipopt-3.12.12/build/Ipopt/examples/hs071_cpp/(to compile just type make and run ./hs071_cpp).